OpenAI is one of the most influential and secretive AI research organizations in the world. Founded in 2015 by a group that included Sam Altman, Elon Musk, Greg Brockman, and Ilya Sutskever, with early backing from investors such as Peter Thiel and Reid Hoffman, OpenAI aims to create artificial general intelligence (AGI), a level of AI that can perform any task that a human can. OpenAI also says its mission is to ensure that AGI benefits humanity and to avoid the pitfalls of AI misuse or abuse.

But is OpenAI really safe? As the organization grows more powerful and ambitious, some critics and observers have raised concerns about its transparency, accountability, and ethics. In this article, we will explore some of the controversies and challenges that surround OpenAI and its quest for AGI. We will also examine some of the potential risks and benefits of OpenAI’s projects and products.

Is OpenAI Safe?

There is no definitive answer to whether OpenAI is safe or not, as different people may have different opinions and perspectives on what constitutes safety and risk in AI.

It depends on who you ask. Some people think OpenAI is safe because it has a charter, safety measures, and collaborations to ensure that its AI systems are beneficial and trustworthy. Others think OpenAI is not safe because it is secretive, ambitious, and potentially risky in its quest for artificial general intelligence (AGI). There are many factors and perspectives to consider when evaluating OpenAI’s safety.

After reading this article, you should be in a better position to answer the question for yourself: is OpenAI safe? So let’s jump straight to the topic.

To reach a conclusion about whether OpenAI is safe, you have to take various factors into account. These factors are discussed in detail below.

Potential Risks Associated with OpenAI

Some of the arguments against OpenAI’s safety are:

- Secrecy and lack of transparency. OpenAI is secretive about its research, goals, and funding. It also has a lot of influence and power in the AI field, which may pose a threat to democracy and accountability.

- OpenAI aims to create artificial general intelligence (AGI), a level of AI that can perform any task that a human can. This may lead to existential risks for humanity if AGI becomes misaligned with human values or surpasses human intelligence.

- OpenAI’s products and projects, such as GPT-3, DALL·E, and Codex, may have negative impacts on society, such as displacing human workers, spreading misinformation or propaganda, creating harmful or offensive content, or enabling cyberattacks or warfare.

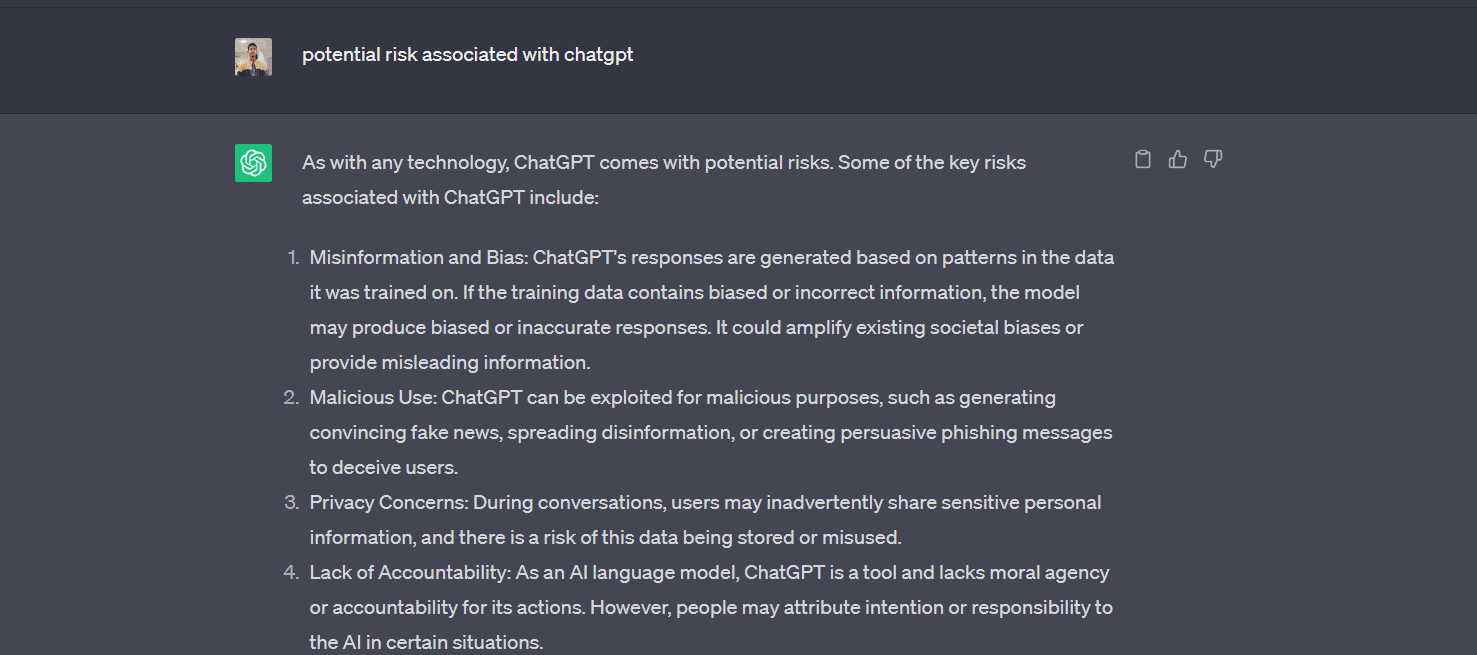

Potential Risks of OpenAI’s ChatGPT

In this section, we analyze the potential risks associated with OpenAI by looking at one of its most advanced and widely used AI products: ChatGPT.

From generating fake news to manipulating human behavior, the capabilities of this technology are not to be taken lightly. OpenAI’s new ChatGPT bot is capable of many things, some of which pose potential risks to society.

Manipulation of human behavior

One of the most dangerous things that OpenAI’s ChatGPT is capable of is manipulating human behavior. By analyzing vast amounts of data on individuals, it can learn their patterns and preferences and use this information to influence their decision-making.

Spreading fake news

OpenAI’s ChatGPT can generate highly convincing fake news articles, which can be used to spread misinformation and sow discord in society. With the ability to generate large quantities of content at a rapid pace, the potential for harm is significant.

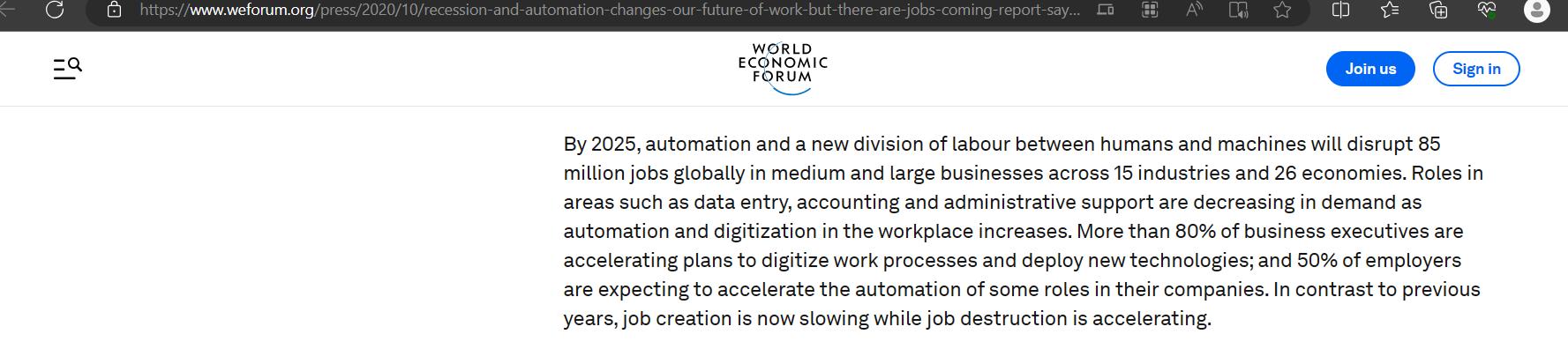

Displacement of jobs

The rise of advanced AI systems developed by OpenAI has raised concerns about potential job displacement. The increasing sophistication of AI technology presents a real risk of automating many jobs currently performed by humans.

The World Economic Forum’s Future of Jobs Report 2020 estimates that by 2025 around 85 million jobs may be displaced by a shift in the division of labor between humans and machines, while approximately 97 million new roles may emerge, a net gain of roughly 12 million. AI could therefore lead to significant changes in the job market, and workers will need to shift their skill sets to adapt to the new roles that emerge.

Cyberattacks and hacking

The technology used in OpenAI’s ChatGPT can also be used for cyberattacks and hacking. With its ability to analyze vast amounts of data and find patterns, it can be used to identify vulnerabilities in computer systems and launch attacks.

Privacy concerns

The capabilities of OpenAI’s ChatGPT also raise significant privacy concerns. By analyzing vast amounts of data on individuals, it can learn personal information that individuals may not wish to share. This can lead to significant privacy violations and risks to personal safety.

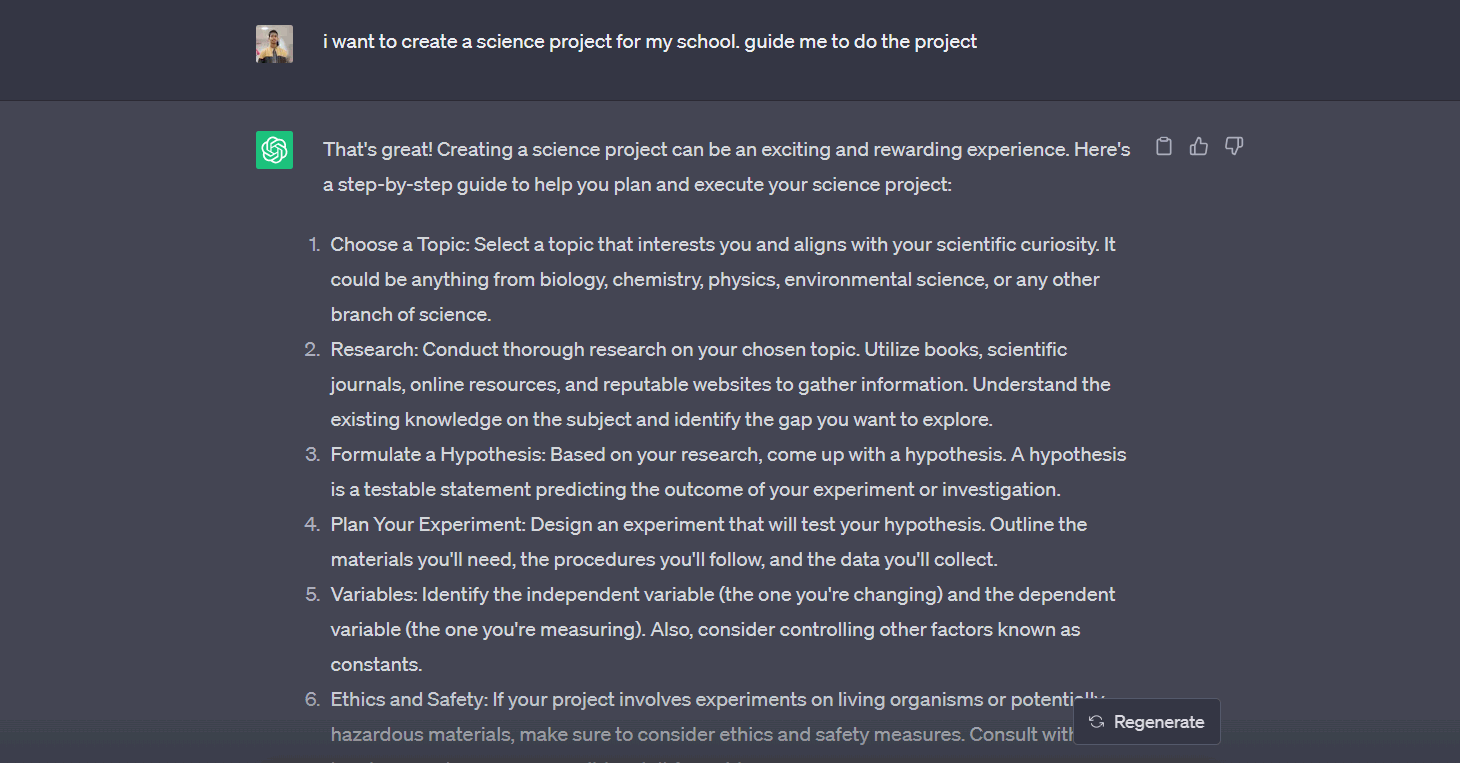

Some of you might now be asking: should students use the AI tools developed by OpenAI? Are they safe for students, or will they make students lazy?

Is OpenAI Safe For Students?

Yes, OpenAI is safe for students as long as they use the AI tools it offers in a responsible and appropriate way.

Some of the AI tools developed by OpenAI that can help students along with their potential risks are given below:

- ChatGPT: A chatbot that uses a powerful language model called GPT-4 to generate natural and engaging conversations. ChatGPT can help students with their learning such as:

- Providing information, feedback, and guidance on various topics

- Explaining complex questions and possible ways to solve them

- Suggesting creative ideas for their projects and assignments, and much more.

However, ChatGPT may not always give accurate or reliable information. So students should use it as a helper or a friend, not as a teacher. They should always check the source and accuracy of the information they get from ChatGPT, and compare it with other sources that can be trusted.

- DALL·E: An image model that can generate and edit novel images and art based on user input. DALL·E can help students with their creativity and expression by allowing them to create and manipulate images of anything they can imagine. However, DALL·E also comes with some limitations in the resolution, quality, and diversity of the generated images.

- Whisper: An audio model that can transcribe speech into text and translate many languages into English. Whisper can help students with their communication and comprehension by enabling them to access and understand audio content in different languages. However, Whisper also comes with some challenges: accuracy varies, transcription takes time, and not every language is supported equally well.

- OpenAI Gym: A library of reinforcement learning environments that can help students learn and practice reinforcement learning algorithms. OpenAI Gym provides a variety of tasks and challenges that range from classic control problems to Atari games to robotics simulations. However, OpenAI Gym also requires some programming skills and familiarity with reinforcement learning concepts.

- OpenAI Baselines: A set of reference implementations of reinforcement learning algorithms that can help students compare and benchmark different algorithms and environments. OpenAI Baselines provides implementations of well-known algorithms that are compatible with OpenAI Gym environments. However, OpenAI Baselines also requires some computational resources and technical knowledge to run and modify the algorithms.
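For readers curious about what working with a Gym-style environment involves, here is a minimal sketch in plain Python. It does not import OpenAI Gym. `CountdownEnv`, `run_episode`, and the two policies are hypothetical names made up for this illustration, and the environment only mimics the classic Gym convention in which `reset()` returns an observation and `step(action)` returns an observation, a reward, a done flag, and an info dictionary. Newer releases of the library use a slightly different return signature, so treat this as a teaching aid rather than the library's actual API.

```python
import random


class CountdownEnv:
    """Hypothetical toy environment; not part of OpenAI Gym."""

    def __init__(self, start=5, max_steps=20):
        self.start = start
        self.max_steps = max_steps
        self.state = start
        self.steps = 0

    def reset(self):
        # Begin a new episode and return the first observation.
        self.state = self.start
        self.steps = 0
        return self.state

    def step(self, action):
        # Action 1 moves the counter toward zero; action 0 wastes a step.
        if action == 1:
            self.state -= 1
        self.steps += 1
        # The episode ends at zero or when the step budget runs out.
        done = self.state == 0 or self.steps >= self.max_steps
        reward = -1.0  # every step costs 1, so shorter episodes score higher
        return self.state, reward, done, {}


def run_episode(env, policy):
    """Run one episode with the given policy and return the total reward."""
    observation = env.reset()
    total_reward = 0.0
    done = False
    while not done:
        action = policy(observation)
        observation, reward, done, _info = env.step(action)
        total_reward += reward
    return total_reward


def always_decrement(observation):
    # A hand-written policy that always picks the useful action.
    return 1


def random_policy(observation):
    # A baseline policy that picks an action at random.
    return random.choice([0, 1])


if __name__ == "__main__":
    env = CountdownEnv()
    print("always decrement:", run_episode(env, always_decrement))
    print("random policy:", run_episode(env, random_policy))
```

The point of the loop is that the algorithm under study only ever sees observations and rewards, which is why the same training code can be reused across very different tasks. A reinforcement learning algorithm would replace the hand-written policies with one that improves from the rewards it collects.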

These are some of the AI tools that OpenAI has developed and they are safe for students as long as they use them wisely and responsibly. Students should not rely on these tools for everything but rather use them as a supplement and an aid for their learning.

In the next section, we will explore OpenAI’s approach to safety and its commitment to developing AI in a responsible manner.

OpenAI’s Approach to Safety

So how does OpenAI itself address the question of safety? One of the key ways OpenAI is working to make its AI safe is through the development of safety frameworks and ethical guidelines. OpenAI has published a number of research papers and articles outlining its approach to safety, which includes a focus on developing AI systems that are transparent, secure, and accountable.

In addition, OpenAI has taken steps to ensure that its AI systems are developed in a responsible and transparent manner. For example, the organization has made many of its research papers and datasets publicly available, allowing researchers and the wider community to review their work and provide feedback.

Another way that OpenAI is addressing safety concerns is through its work on building secure and transparent AI systems. The organization is actively researching ways to develop AI systems that are secure from potential cyber-attacks and other forms of malicious activity. They are also working to develop systems that are transparent, allowing researchers and others to understand how the system is making decisions and identify potential biases.

Criticisms of OpenAI’s Safety Measures

While OpenAI’s approach to safety is commendable, it is not without its criticisms. One of the main criticisms of OpenAI’s approach to safety is that its safety frameworks are not comprehensive enough. Critics argue that more needs to be done to address the complex ethical and safety issues associated with AI.

There are also concerns about the limitations of ethical guidelines in addressing complex AI safety issues. While ethical guidelines can provide a useful framework for addressing some safety concerns, they may not be sufficient to address all of the potential risks associated with AI.

Finally, there are concerns about OpenAI’s level of transparency and whether they are doing enough to involve the wider AI research community in their safety efforts. Critics argue that OpenAI needs to do more to collaborate with other organizations and individuals working in the field of AI in order to ensure that their safety efforts are as comprehensive and effective as possible.

Conclusion

In conclusion, OpenAI is a safe organization that is pushing the boundaries of what is possible with artificial intelligence. However, OpenAI’s quest for AGI may also pose significant risks for society, both in the near and long term. It is important to note that, as with any new and powerful AI tool, such as NightCafe, Midjourney, or ChatGPT, there are inherent risks and concerns associated with its development and deployment.

Overall, OpenAI’s progress in developing advanced AI technology is impressive, and the organization is taking steps to address safety concerns. However, it is important to continue to monitor its progress and work to mitigate any potential risks associated with this powerful technology.

As you can see, there are many factors and perspectives to consider when evaluating OpenAI’s safety. Ultimately, the answer may depend on your own values, beliefs, and expectations about AI and its impact on the world. What do you think? Is OpenAI safe? Why or why not?

Below are some frequently asked questions related to whether OpenAI is safe.

Frequently Asked Questions

Is it safe to give your phone number to OpenAI?

According to OpenAI, it is safe to give the company your phone number. OpenAI requires a number at sign-up to verify your account and maintain the security of the platform. The company has said that your phone number will not be used for other purposes and that it takes privacy seriously.

Is the OpenAI API Safe to Use?

Yes, the OpenAI API is generally safe to use. OpenAI claims to take security seriously and implements several measures to protect its API from malicious actors. According to its website, the OpenAI API undergoes annual third-party penetration testing, which identifies security weaknesses before they can be exploited. Moreover, OpenAI says it has experience helping its customers meet their regulatory, industry, and contractual requirements (e.g., HIPAA).

OpenAI’s Approach to Securing Its API

OpenAI also has a comprehensive approach to AI safety, which they describe in detail in a blog post. Their approach includes the following elements:

- Rigorous testing: Before releasing any new system, they conduct extensive testing to ensure its functionality and reliability.

- External feedback: They engage external experts for feedback and suggestions on how to improve their models and systems.

- Reinforcement learning with human feedback: They use reinforcement learning techniques to train their models with human feedback, which helps them align their models with human values and preferences.

- Broad safety and monitoring systems: They build safety and monitoring systems that cover various aspects of their models and systems, such as content moderation, bias detection, error correction, etc.

- Real-world use and learning: They cautiously and gradually release their models and systems to a broadening group of users and learn from their real-world use cases and behaviors. They also make continuous improvements based on the lessons they learn.

- Misuse prevention and mitigation: They work hard to prevent foreseeable misuse of their models and systems before deployment, but also recognize that they cannot predict all the possible ways people will abuse them. Therefore, they monitor for and take action on misuse, and build mitigations that respond to the real ways people misuse their systems.

Potential Challenges and Uncertainties of the OpenAI API

Despite their efforts to ensure security and safety, OpenAI also acknowledges that there are limits to what they can do to prevent and mitigate all the risks and challenges associated with powerful AI systems. They admit that they cannot foresee all the beneficial or harmful ways people will use their technology, nor all the unintended consequences or side effects that may arise. They also state that regulation is needed to ensure that powerful AI systems are subject to rigorous safety evaluations and that they actively engage with governments on this issue.

Therefore, it can be said that using the OpenAI API is safe. However, users of the API should be aware of the limitations and risks of this technology, and use it responsibly and ethically.

Should I give ChatGPT my phone number?

Yes, you will have to enter your phone number if you want to sign up for ChatGPT. The phone number is only part of the verification process. OpenAI has stated publicly that users’ phone numbers will not be used for any other purpose and that it takes user privacy seriously.

Hey there! I’m Kuldeep Kumar, and tech is my jam. From the mind-blowing world of AI to the thrilling battlegrounds of cybersecurity, I love exploring every corner of this amazing world. Gadgets? I geek out over them. Hidden software tricks? Bring ’em on! I explain it all in clear, bite-sized chunks, laced with a touch of humor to keep things sparky. So, join me on this tech adventure, and let’s demystify the wonders of technology, one blog post at a time.
